Over the past several years, web applications have evolved from collections of simple HTML pages into highly scalable and interactive rich applications built using a variety of technologies. Designing and developing these applications is complex. In addition, decision makers are increasingly seeking to build even richer interactive capabilities into such applications while still maintaining or improving their performance. But high performance comes at a cost. To build web applications that deliver a solid end-user experience, developers need to address the potential performance bottlenecks.

This article focuses on caching—an imperative for delivering high performance applications—and also briefly touches on compression. Several companies produce and sell specialized compression and performance products. This article seeks to simply describe the things that developers can build into their applications at both the client and server levels before seeking specialized products to solve performance problems.

Performance Bottlenecks

The performance bottlenecks are primarily high latency, congestion, and server load. Caching can’t address all three problems, but with careful design considerations, caching can improve performance. You can cache content at both the server and the client levels. It turns out that, on average, downloading HTML requires only 10 to 20 percent of the total end user response time; the other 80 to 90 percent is spent downloading all the other components in the page. Such components typically include images such as company logos, which don’t change from page to page, yet must be downloaded each time a user requests a page containing that logo. Caching the logo can avoid several roundtrips to the server.

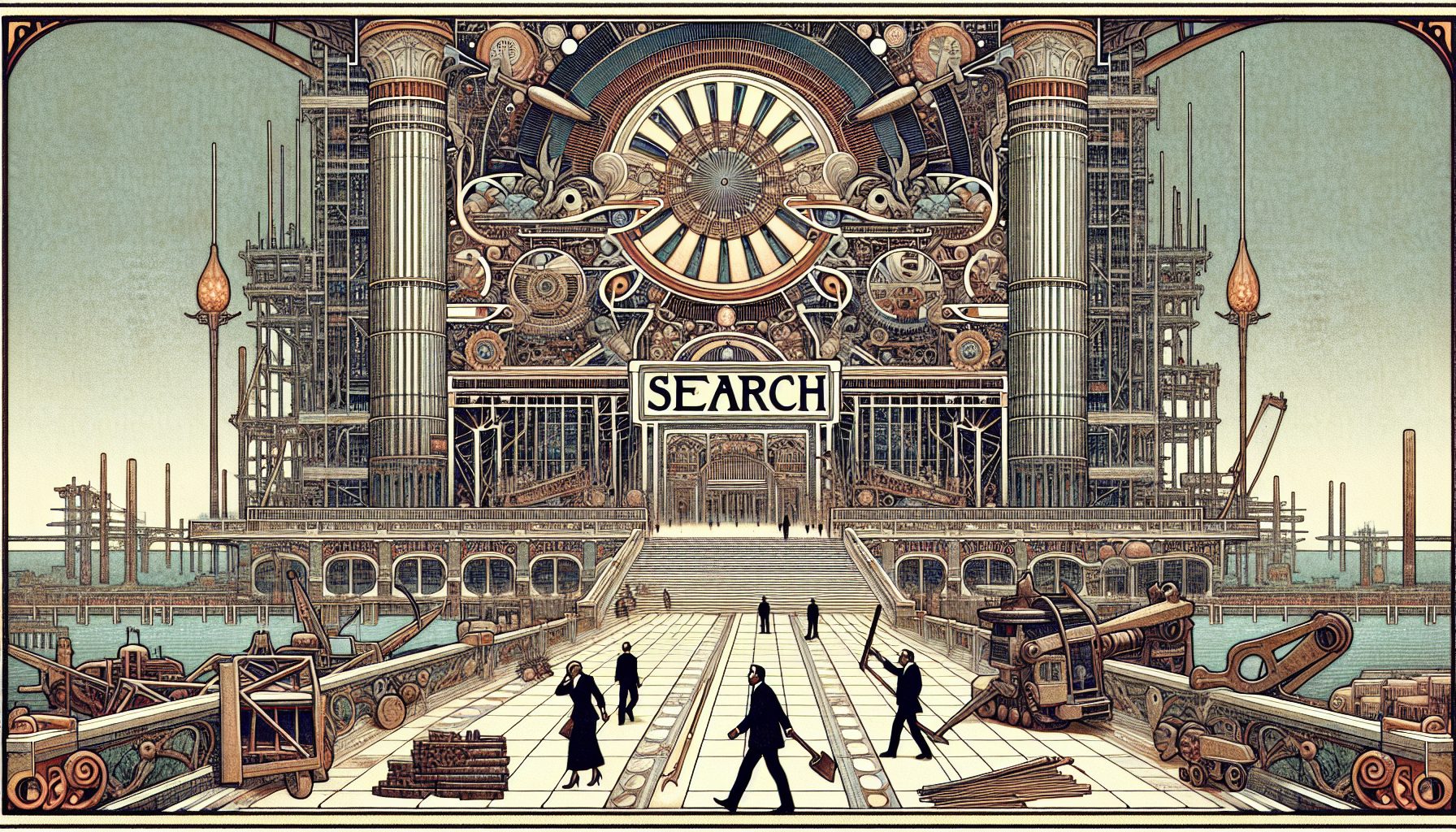

Figure 1. Caching on the Internet: The diagram shows a typical request along with the opportunities for retrieving cached information.

Simply put, a cache is temporary storage. It replicates data on a different computer or in a different location than the original data source. With the right configuration, access to cached data is faster than access to the original data. Using cached data also reduces server load and bandwidth consumption, resulting in enhanced performance from an end user’s point of view.

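A cache decides whether it can reuse a stored object by checking the object’s freshness and, if necessary, validating it. Both are governed by a combination of HTTP request and response headers:

- Freshness determines whether an object can be served from the cache without contacting the origin server. You control it using the expires and cache-control: max-age headers.

- Validation determines whether a cached object that is no longer fresh has actually changed on the server. You control it using the last-modified and if-modified-since headers.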
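The freshness check can be sketched in a few lines of Python. This is a minimal illustration rather than a complete cache: the function names are hypothetical, the headers are assumed to arrive as a dictionary with lower-case keys, and a response with no explicit lifetime is simply treated as stale.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime


def freshness_lifetime(headers, response_time):
    """Return how long a response may be served from cache, as a timedelta.

    cache-control: max-age takes precedence over expires.
    """
    cache_control = headers.get("cache-control", "")
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return timedelta(seconds=int(value))
    expires = headers.get("expires")
    if expires:
        try:
            return parsedate_to_datetime(expires) - response_time
        except (TypeError, ValueError):
            return timedelta(0)  # an unusable date counts as already expired
    return timedelta(0)  # assumption: no explicit lifetime means stale


def is_fresh(headers, response_time, now):
    """True if the cached object can be served without contacting the server."""
    age = now - response_time
    return age < freshness_lifetime(headers, response_time)
```

For example, a response received at noon with cache-control: max-age=60 is fresh at 12:00:30 and stale at 12:01:30. A stale object is not necessarily discarded; it becomes a candidate for validation.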

Designing Highly Cacheable Web Applications

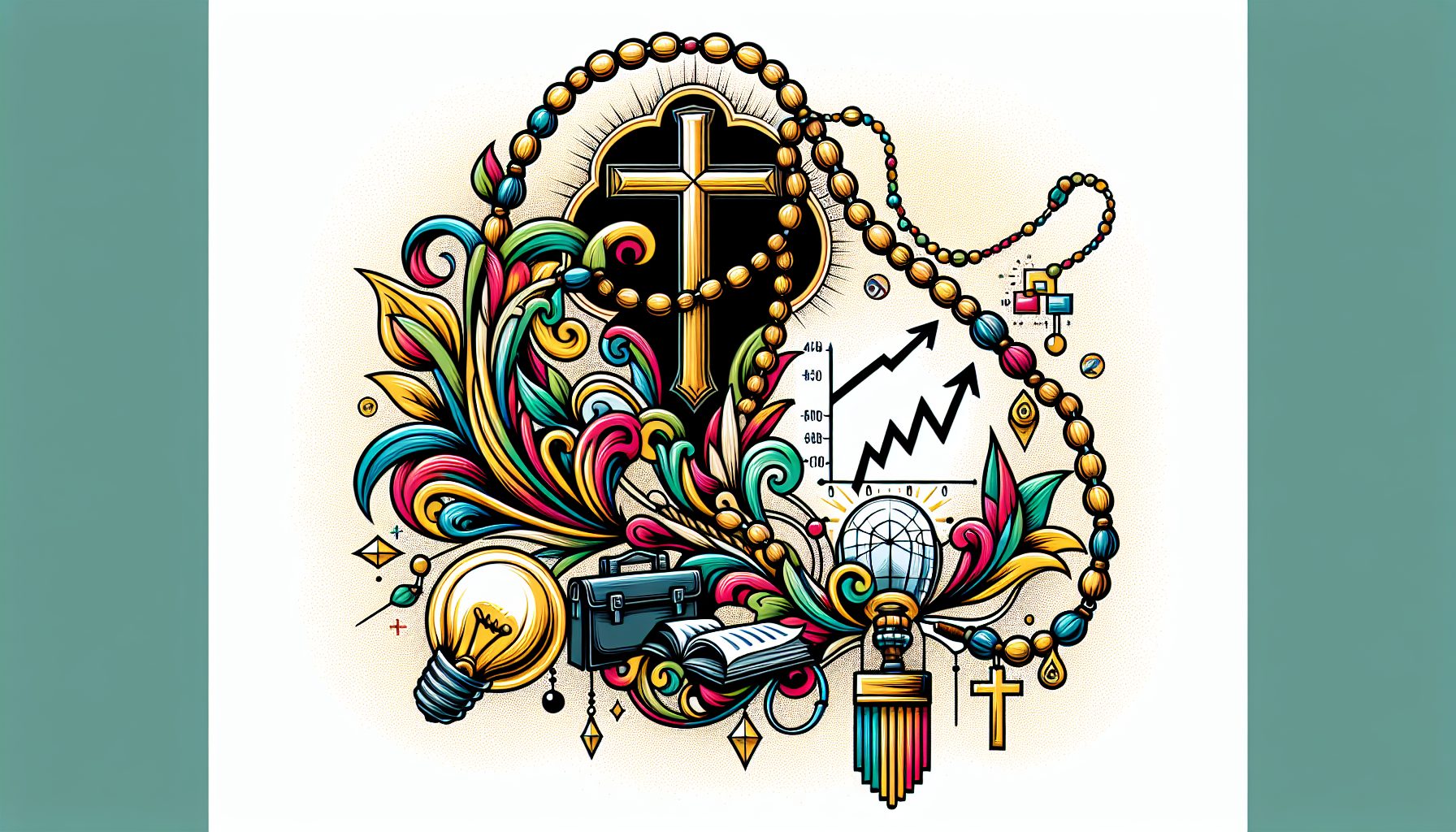

Figure 3. Determining Cacheability: The figure provides guidelines for determining whether an object should be cacheable.

Enterprise web applications have both dynamic and static components. With proper design, they can be architected to deliver the static components from cache and the dynamic components from an origin server. However, the first step is determining what to cache. Figure 3 provides guidelines that can help you determine whether an object is cacheable or dynamic (non-cacheable).

After application architects have differentiated between the cacheable and non-cacheable objects, developers should seek to maximize cache hits while simultaneously avoiding caching the dynamic objects. Here are some best practices:

- Use the Cache-Control and Expires headers.

- Use the Last-Modified header.

- Check that the web server supports If-Modified-Since.

- Investigate the feasibility of using a forward proxy cache for a small site, or leveraging professional help from a CDN company for large-scale enterprise sites.

- Consider using datacenters or co-locations depending on the scalability of the web site.

- Do-it-yourself coding is usually time- and effort-intensive. Depending on the scale of the web site, you may want to consider using open source caching mechanisms such as Squid on your proxy servers.

- Definitely leverage a mix of caching mechanisms for file downloads.

- Ensure that no user- or input-dependent dynamic transactions get cached. Creating a cache map of different objects can help to segregate cacheable from non-cacheable objects.

- Be wary of Content Management Systems (CMSs) that completely ignore the cache headers.

Using Headers for Caching

This section covers the most useful headers for caching purposes.

Controlling Caching

In the HTTP/1.1 specification, a server sends a cache-control response header with the value no-cache to indicate that caches must not reuse the content without first checking with the server (the stricter no-store value forbids storing it at all). Both client- and server-side caches should honor this header so that the dynamic content it marks is never served from a stale copy. Most server-side languages and frameworks provide a way to set response header values.

Figure 4. Stop Proxy Server Caching: This request-response flow shows a server returning private to prevent proxy server caching.

On the other hand, you can allow caching by returning a server response of public for the cache-control header (absence of the cache-control header can also indicate that the object can be cached). A cache-control header value of private is a special case, meaning that the browser may cache the object locally, but proxy servers should not cache it.
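As a rough illustration of how a cache might interpret these values, the following Python sketch decides whether a stored response may be reused without contacting the server. The function names are hypothetical, freshness is ignored for brevity, and a missing header is treated as cacheable, as described above.

```python
def parse_directives(cache_control):
    """Split a cache-control value into a set of lower-case directive names."""
    return {
        part.strip().partition("=")[0].lower()
        for part in cache_control.split(",")
        if part.strip()
    }


def may_serve_from_cache(cache_control, shared_cache):
    """Decide whether a cache may reuse a stored response without asking the server.

    cache_control: the header value, or None if the header is absent.
    shared_cache:  True for a proxy server, False for a browser cache.
    """
    if cache_control is None:
        return True  # no header: the object can be cached
    directives = parse_directives(cache_control)
    if "no-cache" in directives or "no-store" in directives:
        return False
    if "private" in directives and shared_cache:
        return False  # only the browser may keep a private response
    return True
```

With this sketch, a value of private is reusable by the browser (`shared_cache=False`) but not by a proxy (`shared_cache=True`), while public is reusable by both.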

The request-response flow in Figure 4 shows how Google instructs the proxy servers not to cache by using the cache-control header.

Finally, when the server responds with an expires header containing a date and time in the future, browsers can reuse the cached object until that expiration time (see Figure 5).

Figure 5. Expiring Content: Google’s Gmail server returns an expires header containing the date and time that the cached page should expire.

Here, Gmail’s response tells the browser that it can reuse the cached Gmail home page until the date and time specified in the expires header.
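On the server side, producing such a header mostly comes down to formatting a future time as an HTTP date. The Python sketch below shows one way to do it with the standard library; the function name and the lifetime parameter are illustrative assumptions, not part of any particular server.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def expires_header(now, lifetime_seconds):
    """Return an expires header value lifetime_seconds after now, in GMT."""
    expiry = now.astimezone(timezone.utc) + timedelta(seconds=lifetime_seconds)
    # usegmt=True emits the "GMT" suffix that HTTP dates use.
    return format_datetime(expiry.replace(microsecond=0), usegmt=True)


# Example: let browsers reuse a page for one hour.
value = expires_header(datetime.now(timezone.utc), 3600)
```

Passing a timezone-aware `now` keeps the result independent of the server's local time zone.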

Using the Last-Modified Header

Figure 6. Last Modification Time: The last-modified timestamp lets browsers determine whether to use locally cached content or re-request the content.

Browsers use this header to check whether a cached object is still valid. When the browser first requests the object, the server responds with a last-modified header containing a timestamp specifying when the object was last modified. The next time the user requests the same object, if the object’s cached lifetime has elapsed, or if the user requested a page refresh, the browser sends a request with an if-modified-since header to the server to determine whether the object has changed. If it has, the server returns the new object in a full response and the browser caches it again; otherwise, the browser delivers the object from its cache and refreshes the cached entry’s stored headers. Figure 6 shows a working example:

Here, when the browser requests www.yahoo.com, the server responds with a last-modified timestamp. Compare that to the behavior when the page is requested again with an if-modified-since header (see Figure 7), using the timestamp from the last-modified header.

Figure 7. Checking for Modifications: When the browser sends the if-modified-since header, the server responds with a status indicating whether the content has been modified since the specified timestamp.

In Figure 7, the browser sent a request with the if-modified-since header and the server responded with a 304 (Not Modified) status code, meaning that the browser can use the cached copy rather than doing a full GET from the server again.
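The server's side of this exchange can be sketched as follows. This is a simplified Python illustration under stated assumptions: the function name is hypothetical, request headers arrive as a dictionary with lower-case keys, and the object's modification time is supplied as a timezone-aware UTC datetime.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def conditional_get(request_headers, last_modified, body):
    """Return (status, headers, body) for a GET that may carry if-modified-since.

    last_modified must be a timezone-aware UTC datetime.
    """
    last_modified = last_modified.replace(microsecond=0)  # HTTP dates have 1 s resolution
    response_headers = {"last-modified": format_datetime(last_modified, usegmt=True)}
    since = request_headers.get("if-modified-since")
    if since:
        try:
            if last_modified <= parsedate_to_datetime(since):
                return 304, response_headers, b""  # browser reuses its cached copy
        except (TypeError, ValueError):
            pass  # ignore a malformed or zone-less date and send the full response
    return 200, response_headers, body
```

The first request receives a 200 response with a last-modified header. Replaying that timestamp in if-modified-since yields a 304 with an empty body, which is the saving the header is designed to provide.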

To completely understand the effect of these headers, it’s best if you experiment yourself, using a variety of header combinations to see the behavior. A good tool for analyzing headers is Wfetch.

A Do-It-Yourself Approach

As suggested earlier, the do-it-yourself (DIY) approach is not always the easiest path. In fact, entire CDN companies exist that provide products and solutions to meet various needs. However, if you need to develop a product in-house, one potential aid is Squid. Squid is used as a component in numerous products and by many ISPs. For example, in a Java application, Squid can be used as a proxy to a Tomcat server. Squid provides more than just HTTP caching, but a full discussion of its capabilities is outside the scope of this article. You can see another example of using Squid at Wikimedia.

HTTP Compression

Caching is only one approach to improve web application performance; compression is another key element. HTTP compression compresses content before it gets sent to the client. Compression capabilities are built into both browsers and servers, and implementation requires both to work hand in hand. When your servers deliver compressed content that can be uncompressed at the browser level, you can save valuable bandwidth, reduce costs, and improve response times.

Browsers advertise their support using the header accept-encoding—typically with a value of gzip. Servers respond with a content-encoding header that specifies the encoding of the response data. For example, a server would include a content-encoding header with a value of gzip if the response was compressed using the gzip format.

Servers examine the MIME type of the response, compressing only those types that can benefit from compression techniques, such as text files, HTML, and PDF files. MIME types that don’t gain much benefit from compression include image formats such as gif files (which are already in a compressed format), some video files, and other pre-compressed binary file formats.
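The negotiation described above can be sketched in Python using the standard gzip module. The function names and the list of compressible MIME types are assumptions made for illustration; a production server would also honor q-values in accept-encoding, which this sketch ignores.

```python
import gzip

# Assumed list of MIME types worth compressing; adjust for a real site.
COMPRESSIBLE_PREFIXES = ("text/", "application/javascript", "application/json", "application/pdf")


def accepts_gzip(accept_encoding):
    """True if the accept-encoding value lists gzip (q-values are ignored here)."""
    codings = [part.split(";")[0].strip().lower() for part in accept_encoding.split(",")]
    return "gzip" in codings


def encode_response(body, content_type, accept_encoding):
    """Return (headers, payload), gzip-compressing only when it is likely to help."""
    headers = {"content-type": content_type}
    if accepts_gzip(accept_encoding) and content_type.startswith(COMPRESSIBLE_PREFIXES):
        payload = gzip.compress(body)
        headers["content-encoding"] = "gzip"
    else:
        payload = body  # e.g. GIF images are already compressed
    headers["content-length"] = str(len(payload))
    return headers, payload
```

An HTML body requested with accept-encoding: gzip comes back compressed with a content-encoding header of gzip, while a GIF image, or any response to a browser that did not advertise gzip, is sent unchanged.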

| Author’s Note: If you implement caching, any proxy servers should support the same compression as the origin servers. |

Overall, a combination of caching and compression can provide huge cost and performance benefits, consequently improving the scalability of your applications.